What is Performance Testing: Types, Benefits and Steps to Perform

Published Date: 18 Jun 2025

All software is built to deliver features and functionality to end users. But even the most feature-rich product still has to prove that it is reliable, resource-efficient, and scalable. If software is released without testing and fails to meet users' expectations, it can damage the company's brand reputation. That's why performance testing is essential: it finds and removes the bottlenecks that degrade the performance of a software solution.

What is Performance Testing?

As the name suggests, performance testing is a type of software testing that evaluates the performance, efficiency, and scalability of a solution or system. It identifies bottlenecks and measures how the software behaves under different conditions, to confirm that it responds consistently and can handle the required number of users.

Performance testing allows you to check whether a solution meets the required service and user experience level. It can also highlight areas where the development team needs to improve code, speed, stability, and other parameters before finalizing the software's deployment or release.

Performance Testing That Powers Your Tech!

DITS helps your software solutions stay agile and scalable, even under pressure. Get reliable load handling from the first click to the last.

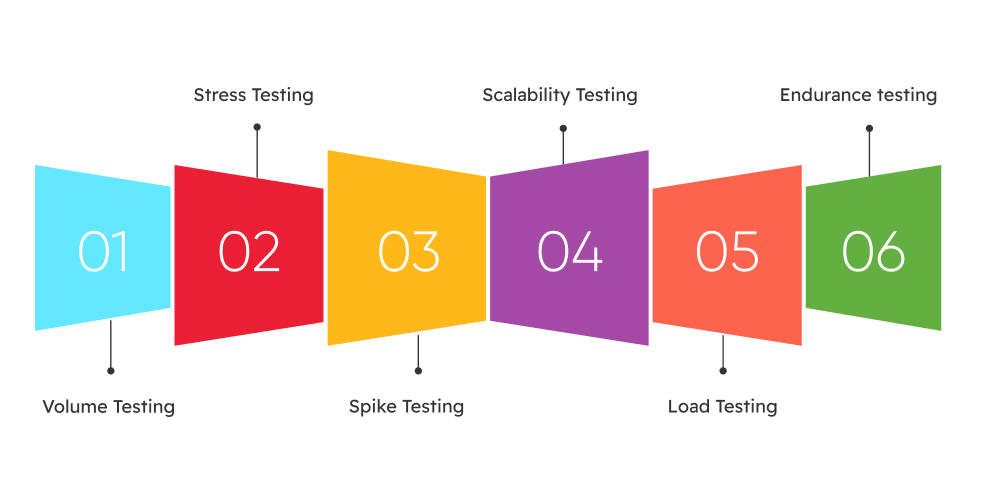

Types of Performance Testing

Testing teams perform various tests depending on different circumstances to get a true feel of how the application will perform once it becomes live for end users. Some of the most common types of performance testing include:

Volume Testing

In volume testing, testers load a large amount of data into the database and observe how the entire software system behaves. The aim is to check the application's capacity for handling large database sizes, so testers vary the quantity of data fed into the database and watch how processing is affected.
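As a rough illustration of the idea, the sketch below times the same query at growing data volumes. It assumes an in-memory SQLite database and a hypothetical `orders` table; a real volume test would target the application's actual database and much larger data sets.

```python
import sqlite3
import time


def time_query_at_volume(row_count: int) -> tuple[int, float]:
    """Load row_count rows into a throwaway table, then time one aggregate query.

    Returns (rows_counted, elapsed_seconds).
    """
    conn = sqlite3.connect(":memory:")  # assumption: in-memory database for the sketch
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    conn.executemany(
        "INSERT INTO orders (amount) VALUES (?)",
        ((float(i % 100),) for i in range(row_count)),
    )
    conn.commit()

    start = time.perf_counter()
    rows_counted, _total = conn.execute(
        "SELECT COUNT(*), SUM(amount) FROM orders"
    ).fetchone()
    elapsed = time.perf_counter() - start

    conn.close()
    return rows_counted, elapsed


# Vary the data volume and watch how the query time changes.
for volume in (1_000, 10_000, 50_000):
    counted, seconds = time_query_at_volume(volume)
    print(f"{counted:>6} rows: {seconds * 1000:.2f} ms")
```

The absolute timings depend on the machine; what matters in a volume test is how the time grows as the data grows.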

Stress Testing

Stress testing checks a software system's ability to handle loads beyond standard usage levels. Testers put the application under extreme pressure, in terms of traffic or heavy data processing, to see how well it holds up. The goal is to find the breaking point of the software solution and to expose any issues that only show up under high load.
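The search for a breaking point can be sketched as a simple loop that raises the load step by step until the error rate crosses a limit. Everything here is an assumption for illustration: `simulated_error_rate` is a made-up model of a system that copes with 500 users, and the 5% threshold is an arbitrary choice. In practice the measurement would come from a real test run.

```python
from typing import Callable, Optional


def simulated_error_rate(users: int, capacity: int = 500) -> float:
    """Hypothetical system model: no errors up to capacity, then errors grow."""
    if users <= capacity:
        return 0.0
    return min(1.0, (users - capacity) / capacity)


def find_breaking_point(
    measure: Callable[[int], float],
    start: int = 100,
    step: int = 100,
    max_users: int = 2000,
    threshold: float = 0.05,
) -> Optional[int]:
    """Raise the load stepwise and return the first user count whose
    error rate exceeds threshold, or None if the limit is never crossed."""
    users = start
    while users <= max_users:
        if measure(users) > threshold:
            return users
        users += step
    return None


print(find_breaking_point(simulated_error_rate))  # prints 600
```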

Spike Testing

Spike testing is a type of performance testing in which the testing team checks the ability of a system to handle sudden traffic spikes. It shows how the software reacts when load jumps abruptly because a large number of users arrive at the same time, and it helps detect issues that only show up under such a sudden surge of requests.

Scalability Testing

Scalability testing determines whether the application can scale effectively as user load grows. For example, it can help you determine how many more virtual users the system can support if you add another application server or allocate more CPU to the database server. It also helps identify the performance bottlenecks that limit scaling. It is hence an essential tool for planning enhancements to your application and understanding the system's ability to handle higher loads.
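One common way to read scalability results is to compare the measured gain against ideal linear scaling. The sketch below assumes you already have throughput figures from two test runs; the function name and the sample numbers are hypothetical.

```python
def scaling_efficiency(baseline_tps: float, scaled_tps: float, scale_factor: float) -> float:
    """Fraction of ideal linear speedup achieved when resources grow by scale_factor.

    1.0 means throughput grew exactly in line with the added resources.
    """
    if baseline_tps <= 0 or scale_factor <= 0:
        raise ValueError("baseline_tps and scale_factor must be positive")
    return (scaled_tps / baseline_tps) / scale_factor


# Hypothetical figures: one app server handled 400 TPS, two handled 700 TPS.
efficiency = scaling_efficiency(baseline_tps=400, scaled_tps=700, scale_factor=2)
print(f"{efficiency:.1%}")  # prints 87.5%
```

An efficiency well below 100% suggests that something other than the scaled resource, for example a shared database, is limiting the system.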

Load Testing

Load testing simulates the expected use of a solution by checking how it performs while a certain number of virtual users carry out transactions over a specific time interval. Here, testers try to identify performance bottlenecks and verify the application's ability to work under the expected number of users. For this, they analyze the number of users that will have access to the system and the operations those users will execute.
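To make the notion of virtual users concrete, here is a minimal sketch that runs several of them in parallel threads and records how long each transaction takes. `fake_transaction` is a hypothetical stand-in for a real request; dedicated tools do the same thing at far larger scale and with richer reporting.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_transaction() -> None:
    """Hypothetical stand-in for one user transaction (e.g. an HTTP call)."""
    time.sleep(0.001)


def virtual_user(iterations: int) -> list[float]:
    """Run a number of transactions and return each elapsed time in seconds."""
    timings = []
    for _ in range(iterations):
        start = time.perf_counter()
        fake_transaction()
        timings.append(time.perf_counter() - start)
    return timings


def run_load_test(users: int, iterations: int) -> list[float]:
    """Run the given number of virtual users concurrently and pool their timings."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = pool.map(virtual_user, [iterations] * users)
        return [t for timings in per_user for t in timings]


timings = run_load_test(users=5, iterations=10)
print(f"{len(timings)} transactions, slowest {max(timings) * 1000:.1f} ms")
```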

Endurance Testing

Endurance testing focuses on the behaviour of the system under a certain load for a long period. It is performed to check whether the solution can handle the expected load over a prolonged time. The goal is to find issues that only show up with extended use of the software, such as memory leaks. Endurance testing also helps testers predict how a system will behave in the future when used for long periods.
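A memory leak usually shows up as a steady upward trend in memory use during a long run. The sketch below fits a straight line through periodic memory samples and reports the growth per hour. The sample values and the sampling interval are made up; in a real test they would come from your monitoring tool.

```python
def memory_growth_per_hour(samples_mb: list[float], interval_minutes: float) -> float:
    """Least-squares slope of memory usage over time, in MB per hour."""
    n = len(samples_mb)
    if n < 2 or interval_minutes <= 0:
        raise ValueError("need at least two samples and a positive interval")
    hours = [i * interval_minutes / 60 for i in range(n)]
    mean_x = sum(hours) / n
    mean_y = sum(samples_mb) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(hours, samples_mb))
    variance = sum((x - mean_x) ** 2 for x in hours)
    return covariance / variance


# Hypothetical samples taken every 30 minutes during a soak test.
samples = [500.0, 510.0, 520.0, 530.0, 540.0]
print(f"{memory_growth_per_hour(samples, interval_minutes=30):.1f} MB/hour")  # prints 20.0 MB/hour
```

A slope near zero suggests stable memory use, while a persistent positive slope is worth investigating.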

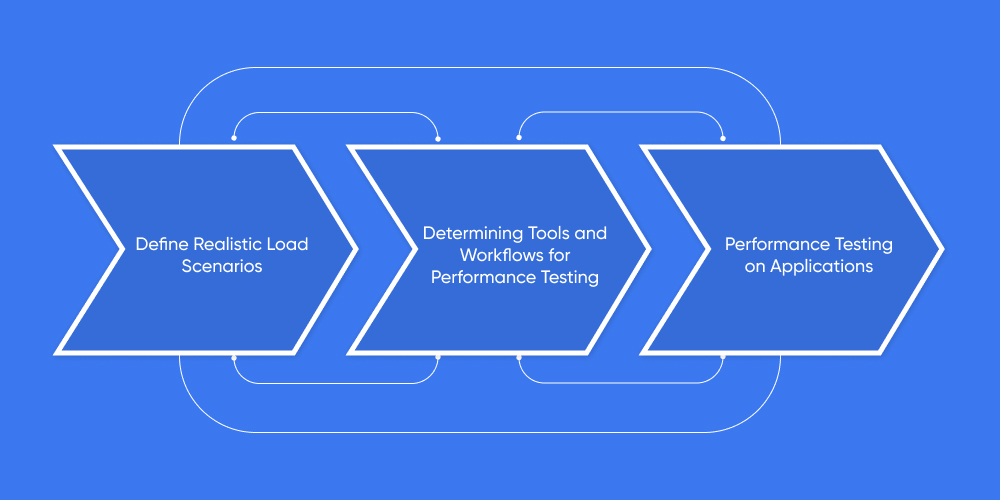

Challenges in Performance Testing

Testing the performance of any software is not as simple as it seems. Testers face multiple challenges when testing different scenarios and applications, which need to be addressed to get accurate results. Here are the common challenges that testers face while testing any software or application.

Defining Realistic Load Scenarios

There can be multiple ways to use a solution. Predicting and simulating possible actions requires understanding user behavior, the anticipated user load, and the available network bandwidth. Working with an experienced testing service provider, like Ditstek Innovations, can significantly improve and optimize performance testing efforts.

Determining Tools and Workflows for Performance Testing

Defining the right tools and workflows for performance testing is also difficult. Many tools have a variety of applications (such as simulating many users and finding bottlenecks), and it is important to use a tool that fits a development team’s skillset and workflow.

Performance Testing on Mobile Applications

Performance testing for mobile applications has unique aspects (i.e., optimizing app testing processes for a better user experience). Testers must confirm that the app works well across various devices with unknown hardware capabilities, different operating systems, and batteries in varying states of charge. The quality of the network (e.g., Wi-Fi vs cellular) and the battery level can also positively or negatively impact the performance of the application in many scenarios.

Lower Costs, Improve Efficiency, DITS Makes Performance Testing Worth Every Penny!

Better performance = fewer bugs, happier users, and lower infrastructure costs with our performance testing.

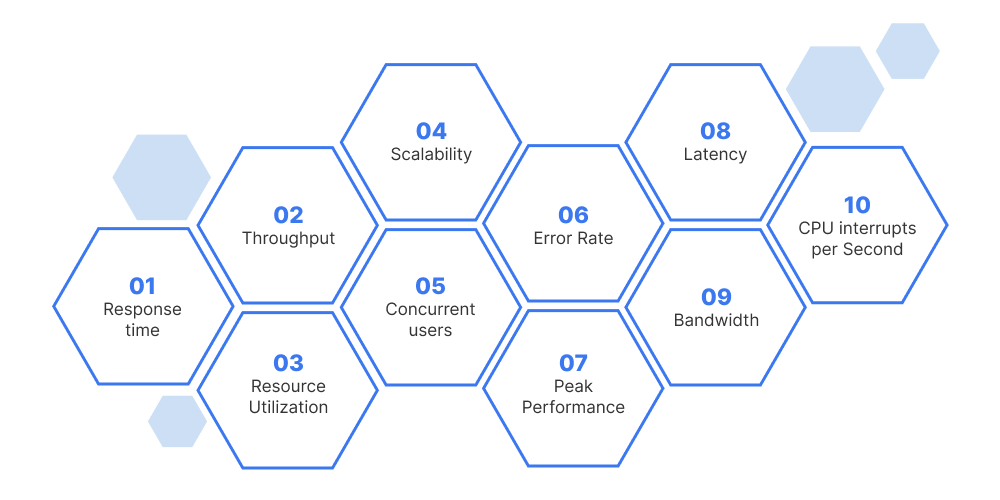

Key Metrics to Consider in Performance Testing

Performance testing analyzes system behavior under different conditions, and its success depends on tracking specific metrics or KPIs. These metrics help testers detect system bottlenecks, judge readiness for production, and plan optimization.

Core Metrics to Monitor

Response Time: The time between the instant a user issues a request and the instant the system responds to it.

Throughput: Number of transactions processed or units of data processed per second.

Resource Utilization: Usage behavior of resources like CPU, memory, disk, and network.

Scalability: The capability of maintaining performance with increasing user load.

Concurrent Users: The maximum number of users who can use the system simultaneously.

Error Rate: The proportion of transactions that fail or produce system-level errors.

Peak Performance: The highest performance value under the maximum load.

Latency: The delay between the user's request for a service and the start of the system's response.

Bandwidth: The quantity of data transferred across the network per second.

CPU Interrupts per Second: The number of hardware interrupts that occur during processing.
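Several of the metrics above can be derived from the raw samples a test run produces. The following sketch is one possible way to do it, assuming each sample records an elapsed time and a success flag. The `Sample` type and field names are hypothetical, and the percentile uses the simple nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    elapsed_ms: float  # response time of one transaction
    ok: bool           # False if the transaction failed


def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples: list[Sample], window_seconds: float) -> dict[str, float]:
    """Compute response time, throughput, and error rate for one test window."""
    times = [s.elapsed_ms for s in samples]
    failures = sum(1 for s in samples if not s.ok)
    return {
        "avg_response_ms": sum(times) / len(times),
        "p95_response_ms": percentile(times, 95),
        "throughput_per_s": len(samples) / window_seconds,
        "error_rate": failures / len(samples),
    }


# Hypothetical samples collected over a 2-second window.
run = [Sample(100, True), Sample(200, True), Sample(300, False), Sample(400, True)]
print(summarize(run, window_seconds=2))
```

Percentiles such as the 95th are often more informative than the average, because a few very slow responses can hide behind a healthy-looking mean.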

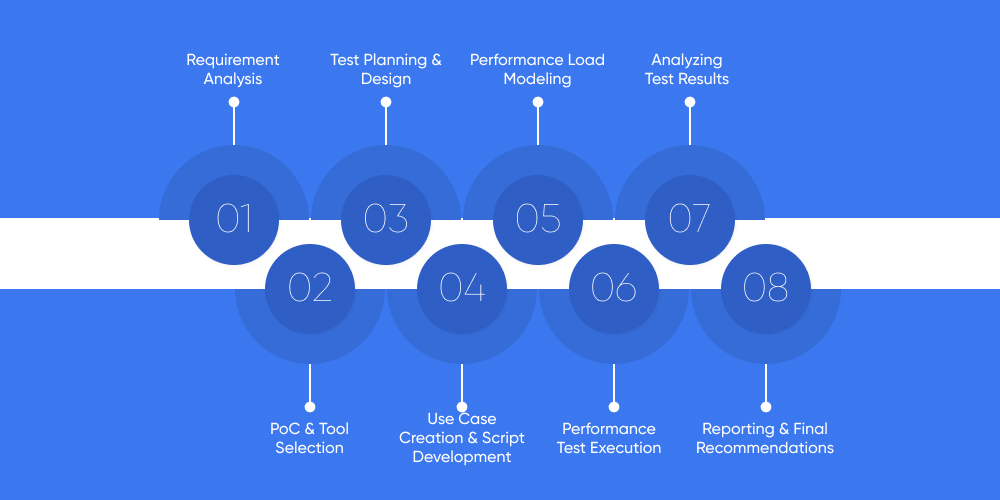

Step-By-Step Process for Performance Testing

While the purpose of performance testing stays the same, ensuring that an application can handle expected workloads smoothly, the exact approach varies slightly from project to project. Here is a simplified explanation of a typical performance testing process.

1. Requirement Analysis

Every process in software development starts with the analysis of requirements. The QA team gathers the technical and business requirements with the key stakeholders. They look deeper into system architecture, hardware and software requirements, the application's functionality, the types of users expected to use it, expected traffic on the application level, and its database design and technology stack. This step sets the basis for everything that will follow.

2. PoC & Tool Selection

The next step is to identify core application functionality and select appropriate testing tools with the help of a proof of concept (PoC). The choice of a tool depends on factors such as licensing cost, supported protocols, and compatibility with the application's technology stack. The PoC should be very basic and run with a small group of 10 to 15 virtual users to confirm the tool's suitability and the script's stability.

3. Test Planning & Design

Okay, so once the PoC gets the green light, the QA folks can do proper planning. They basically take everything they've learned so far, what worked and what didn't, and create a test plan. We're talking about mapping out the test environment to determine hardware requirements, sorting workloads, and nailing down all the little details.

4. Use Case Creation & Script Development

Now comes the fun part: breaking down exactly what the app is supposed to do and turning those functions into real test use cases. Once everyone's on board, the team goes into script-writing mode using a performance tool. Oh, and it's not just record-and-done. They enhance the scripts with parameterization, correlation, and custom logic so the tests don't fail at runtime.
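Parameterization and correlation are easier to grasp with a small example. In the sketch below, parameterization means each iteration uses different login data, and correlation means a dynamic token is captured from one response and reused in the next request. The two "server" functions, the credentials, and the token format are all hypothetical stubs; a real script would do this inside the chosen performance tool.

```python
import itertools
import re

# Parameterization: each iteration draws different test data instead of
# replaying one recorded login. (Hypothetical test accounts.)
CREDENTIALS = [("alice", "pw1"), ("bob", "pw2"), ("carol", "pw3")]


def fake_login(username: str, password: str) -> str:
    """Hypothetical server stub: returns a page embedding a per-session token."""
    return f'<input name="csrf_token" value="tok-{username}-{len(password)}">'


def fake_submit_order(token: str) -> int:
    """Hypothetical server stub: accepts only tokens in the issued format."""
    return 200 if token.startswith("tok-") else 403


TOKEN_PATTERN = re.compile(r'name="csrf_token" value="([^"]+)"')


def run_iteration(username: str, password: str) -> int:
    page = fake_login(username, password)
    # Correlation: capture the dynamic value from the response and
    # reuse it in the next request instead of a stale recorded value.
    match = TOKEN_PATTERN.search(page)
    if match is None:
        raise RuntimeError("token not found in login response")
    return fake_submit_order(match.group(1))


def run_script(iterations: int) -> list[int]:
    data = itertools.cycle(CREDENTIALS)
    return [run_iteration(*next(data)) for _ in range(iterations)]


print(run_script(5))  # prints [200, 200, 200, 200, 200]
```

Without the correlation step, a replayed script would send an old token and the requests would be rejected, which is a typical reason recorded scripts fail at runtime.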

5. Performance Load Modeling

In this step, the QA crew builds out a performance load model, which is basically a shadow army of users clicking buttons and messing things up (like humans do). However, they need to keep it realistic, as they want to see if the system can actually keep up when people start piling in.
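A common starting point for sizing that "shadow army" is Little's Law, which relates concurrent users to throughput and the time each user spends per transaction: users = throughput × (response time + think time). The sketch below applies it; the target figures are hypothetical and would normally come from the requirement analysis.

```python
import math


def required_virtual_users(target_tps: float, avg_response_s: float, think_time_s: float) -> int:
    """Estimate concurrent virtual users needed to sustain target_tps.

    Uses Little's Law: users = throughput * (response time + think time).
    """
    if target_tps < 0 or avg_response_s < 0 or think_time_s < 0:
        raise ValueError("inputs must be non-negative")
    return math.ceil(target_tps * (avg_response_s + think_time_s))


# Hypothetical target: 50 transactions/s, 0.5 s responses, 4.5 s of user think time.
print(required_virtual_users(target_tps=50, avg_response_s=0.5, think_time_s=4.5))  # prints 250
```

Including think time keeps the model realistic: real users pause between actions, so far more of them are needed to produce a given transaction rate than a script firing requests back to back.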

6. Performance Test Execution

When everything is ready, it's time for the performance test to be executed. They fire up the Performance Center, Controller, or whatever tool is in play and start pushing the load. Not all at once, though. They ramp it up bit by bit. Maybe 10 users, then 20, then 50, 100, or more. Meanwhile, everyone looks into the metrics, just waiting for something to break (and it usually does).
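The gradual ramp-up described above can be written down as a simple schedule of load steps. This sketch uses the user counts mentioned in the text and assumes each step is held for a fixed, hypothetical 60 seconds; load tools typically accept a similar step definition in their own configuration format.

```python
def ramp_schedule(user_steps: list[int], hold_seconds: int) -> list[tuple[int, int]]:
    """Return (start_second, users) pairs, holding each load level for hold_seconds."""
    if any(later < earlier for earlier, later in zip(user_steps, user_steps[1:])):
        raise ValueError("a ramp-up should not decrease the user count")
    return [(index * hold_seconds, users) for index, users in enumerate(user_steps)]


for start, users in ramp_schedule([10, 20, 50, 100], hold_seconds=60):
    print(f"t={start:>3}s -> {users} users")
```

Holding each level for a while before stepping up makes it easier to see at which load the metrics start to degrade.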

7. Analyzing Test Results

After every test run, the team investigates the data. What went wrong? They check the response times and any errors. They compare results, label everything, and prepare reports with all the graphs and stats. However, getting the full performance picture usually takes a few rounds.
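Comparing results between rounds is easier when it is done the same way every time. The sketch below flags metrics that got worse than an earlier baseline by more than a tolerance. The metric names, values, and the 10% tolerance are hypothetical, and it assumes that lower values are better for every metric passed in.

```python
def compare_to_baseline(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerance_pct: float = 10.0,
) -> dict[str, float]:
    """Return the percentage increase for each metric that worsened beyond tolerance_pct.

    Assumes lower is better (e.g. response time, error rate).
    """
    regressions = {}
    for name, base_value in baseline.items():
        if name not in current or base_value == 0:
            continue  # nothing to compare against
        change_pct = (current[name] - base_value) / base_value * 100
        if change_pct > tolerance_pct:
            regressions[name] = round(change_pct, 1)
    return regressions


# Hypothetical results from two test rounds.
previous_run = {"p95_ms": 400.0, "error_rate_pct": 1.0}
latest_run = {"p95_ms": 500.0, "error_rate_pct": 1.05}
print(compare_to_baseline(previous_run, latest_run))  # prints {'p95_ms': 25.0}
```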

8. Reporting & Final Recommendations

Once the tests are analyzed, the testing team creates a final report. The final report is supposed to make sense to the development team and anyone else who needs to act on it. It covers what worked, what didn't, and what still needs to be fixed, with clear recommendations. The idea is to make it easy and simple for the developers to know where to start and what to do.

Supercharge Your Software with Our Performance Testing Services!

Ensure your apps stay fast, stable, and stress-free under any load - DITS brings real power to performance.

How Can DITS Help?

Every step in performance testing is crucial for the solution's success and a satisfactory user experience. That is why it needs to be done by a company with an experienced testing team like Ditstek Innovations. From requirement analysis to analyzing the results and creating the final report and recommendations, we pay attention to every detail of the process to ensure the solution is tested under every relevant condition, such as heavy load and traffic. That’s why we stand out as one of the best software development companies in Canada with 100% client satisfaction.

We not only test software solutions but also offer ongoing maintenance and support to ensure the product continues to work and serve your business needs. We create solutions to help you minimize costs, improve operational efficiency, and boost profits for your business.

Benefits of Performance Testing for Businesses

Now that we know the steps of performance testing, let us explore some of the benefits that lead organizations to conduct it on their software solutions.

Improved End-User Experience

What matters most to your users is a smooth and responsive app; good performance keeps them engaged. Performance testing allows you to catch slow interactions and bugs, resulting in a quicker, smoother experience for your end users. Smartphone users are far more likely to keep using an application if they have a positive experience.

Increased Conversion Rates

A smooth experience leads to higher conversion rates. If users experience no disruptions, they are more likely to successfully complete the desired action, like making a purchase or signing up for a service.

Increased Scalability and Reliability

Performance testing gauges how your system responds to various levels of user activity. This process identifies possible issues, such as slow back-end databases, overloaded servers, slow API responses, and front-end problems that may require optimizing how resources are loaded. Identifying these problems early is essential to ensure your software is prepared for increased demand and provides reliable performance.

Minimized Infrastructure Cost

Performance testing helps eliminate performance bottlenecks so that your infrastructure is used efficiently. This helps you avoid overspending on hardware, software, and cloud services.

More Efficient Development Practices

Performance testing is best done early in the development cycle. This allows teams to detect and correct performance problems as soon as they appear. With a more effective development process, your team is likely to build a faster and more efficient application overall.

Why Choose DITS for Performance Testing

At DITS, we have been testing a variety of software solutions for almost a decade. Apart from software development, we have a dedicated testing team with expertise and experience in testing different types of software. We have clients across the globe with a 99% satisfaction rate.

We also offer custom software development services to various industries, including but not limited to transportation, IoT, education, healthcare, fintech, retail, AI, and real estate. We have a 97% client retention rate, and we also offer software integration and ongoing maintenance services.

With almost a decade of experience in software development and testing, DITS is one of the best custom software development companies in Canada you can rely on. If you are looking for performance testing, you can visit our website and fill out the “Contact Us” form, and our team will get back to you shortly.

Need Help With Performance Testing Setup?

Talk to our specialists about streamlining your testing process, tools, and strategy. We'll guide you through every step from planning to flawless execution.

Conclusion

Performance testing is not optional; it is an investment in the success of your software. By knowing the different types of testing, including load testing, stress testing, scalability testing, and endurance testing, and following the steps we have set out, organizations can identify and fix bottlenecks before they reach users. This means not only that your applications work but that they are fast, stable, and able to handle user demands. All things considered, great performance testing means happier users, less costly downtime, and protection for your brand in a competitive digital world. From development to deployment, it is wise and worthwhile to keep performance in mind, and doing so will help you offer the best user experiences.

FAQs

Who performs performance testing?

Performance testing is usually performed by performance engineers, QA teams, or test specialists. They typically have the requisite training in the performance testing tools and processes needed to define and run performance tests and assess their results.

What tools are used in performance testing?

Common performance testing tools include Apache JMeter, LoadRunner, NeoLoad, and k6. The choice of tool depends primarily on the application's technology stack, cost, and other testing criteria.

What are the most important performance testing metrics?

The most important performance testing metrics include response time, throughput, transactions per second (TPS), error rate, resource utilization (CPU, memory), and latency. Each of these metrics shows how the application's speed and stability degrade while a load is being applied.

What is the difference between performance testing and functional testing?

Performance testing evaluates how well a system performs under load (speed, stability), whereas functional testing evaluates whether the system's features work as designed and in line with requirements. The two are complementary but have different objectives.

Can performance testing be automated?

Yes. Performance testing is generally automated, especially test execution. Automation tools provide repeatability and consistency, which is important for isolating performance regressions and for integrating testing into a continuous integration pipeline.
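In a continuous integration pipeline, automation often ends with a simple pass/fail gate on the results. The sketch below shows one way such a gate could look. The metric names and limits are hypothetical, and a real pipeline would load the results from the report produced by the testing tool.

```python
# Hypothetical limits agreed for the application under test.
THRESHOLDS = {"p95_response_ms": 800.0, "error_rate": 0.01}


def check_thresholds(results: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Return a list of human-readable violations; an empty list means the run passed."""
    violations = []
    for metric, limit in thresholds.items():
        value = results.get(metric)
        if value is None:
            violations.append(f"{metric}: missing from results")
        elif value > limit:
            violations.append(f"{metric}: {value} exceeds limit {limit}")
    return violations


def gate_exit_code(results: dict[str, float]) -> int:
    """Print any violations and return 0 for pass, 1 for fail."""
    violations = check_thresholds(results, THRESHOLDS)
    for line in violations:
        print("FAIL", line)
    return 1 if violations else 0


# Hypothetical results from the latest run; a CI job would pass this
# value to sys.exit() so that a failed gate stops the pipeline.
print(gate_exit_code({"p95_response_ms": 650.0, "error_rate": 0.002}))  # prints 0
```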

Dinesh Thakur

21+ years of IT software development experience in different domains like Business Automation, Healthcare, Retail, Workflow automation, Transportation and logistics, Compliance, Risk Mitigation, POS, etc. Hands-on experience in dealing with overseas clients and providing them with an apt solution to their business needs.
